What’s Computer Vision anyway?

When I hear the term “computer vision”, the first thing that comes to mind is a computer with eyes that give it a sense of sight. Although this is not the exact definition the computer science community would agree with, my train of thought is on the right path. Computer vision is a subfield of computer science concerned with getting computers to interpret images the way humans do. I know what you’re thinking: computers can’t see! That’s precisely the issue! However, after a few weeks in CS 4476 at Georgia Tech, I have begun to learn how the scientific community has worked to close this gap between machines and humans. In this reflection, I will present a brief walkthrough of my experience completing CS 4476 Project 1, along with some of its important concepts and corresponding pictures. If you enjoy optical illusions, get ready for hybrid images!

There is a common misconception among people outside the computer science (CS) community that computers are extremely smart and adept, because they can do difficult tasks at lightning speed while a human would likely take much longer. This is FALSE! Everything a computer “knows” how to do, a human taught it. Fields like artificial intelligence and machine learning have long explored how to reproduce human cognitive functions like thinking, feeling, and perceiving in machines. Computer vision is no different: we want to know how to make a computer see the way a human sees.

My Experience in CS and Project Details

When I started this class this semester, I was just as intimidated as I was walking into the Machine Learning course last year. I did end up surviving that terrifying ML class, although I still have nightmares about the assignments. Most CS courses at Georgia Tech intimidate me with all of the complex math and science thrown around, and computer vision was no exception. The first project, titled Convolution and Hybrid Images, required students to write an image filtering function in order to create hybrid images. What does all this mean? Allow me to explain as best I can without going into intense, scary detail.

Our project was broken up into three parts: Image Filtering, Hybrid Images with PyTorch, and understanding inputs and outputs in PyTorch. The first section involved using Gaussian kernels to blur the images given in the prompt. To do this, we had to apply linear algebra to build vectors and matrices, and statistics to work with the mean, standard deviation, and covariance of the Gaussian. The term kernel in this context has nothing to do with popcorn, although that was my first thought too. In image processing, a kernel (also called a filter) is a small grid of weights that slides over the image; each output pixel is a weighted sum of the input pixels underneath it. This is a completely different thing from the operating-system kernel, the core program that provides basic functions to every other part of the system.
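For readers curious what “creating a Gaussian kernel” looks like in code, here is a minimal NumPy sketch. It is not the project's reference solution: the function name `gaussian_kernel_2d` and the size rule k = 4σ + 1 are assumptions for illustration. It evaluates a 1D Gaussian (mean at the center index, standard deviation σ) and takes the outer product of that vector with itself, which gives a 2D Gaussian whose covariance is σ² times the identity matrix.

```python
import numpy as np

def gaussian_kernel_2d(sigma: float) -> np.ndarray:
    """Build a normalized 2D Gaussian kernel from a 1D Gaussian vector.

    Assumption: kernel size k = 4*sigma + 1, bumped to the next odd number
    if needed, so the kernel has a single center pixel (the mean).
    """
    k = int(4 * sigma + 1)
    if k % 2 == 0:
        k += 1
    mean = k // 2
    x = np.arange(k)
    # 1D Gaussian evaluated at each integer position, centered on `mean`
    g = np.exp(-((x - mean) ** 2) / (2 * sigma ** 2))
    g /= g.sum()  # normalize so the 1D weights sum to 1
    # Outer product of the vector with itself gives the 2D kernel
    return np.outer(g, g)

kernel = gaussian_kernel_2d(sigma=2.0)
print(kernel.shape)                     # → (9, 9)
print(np.isclose(kernel.sum(), 1.0))    # → True
```

Normalizing the weights so they sum to 1 keeps the overall brightness of the image unchanged when the kernel is applied.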

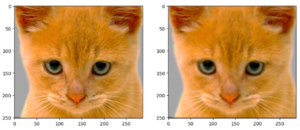

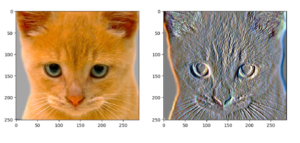

Using the Gaussian filter, we were able to see the image filtering function we wrote in action, blurring an image of a cute cat. See how the cat on the right is ever-so-slightly blurry?
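The image filtering function itself boils down to a sliding weighted sum. The version below is a hypothetical `filter_image`, written for clarity rather than speed and not the project's required signature. It assumes odd kernel dimensions, zero padding so the output matches the input size, and independent filtering of each color channel. Strictly speaking it computes cross-correlation; true convolution flips the kernel first, which makes no difference for a symmetric kernel like a Gaussian.

```python
import numpy as np

def filter_image(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Filter an (H, W, C) image with a 2D kernel by cross-correlation."""
    kh, kw = kernel.shape
    assert kh % 2 == 1 and kw % 2 == 1, "kernel dimensions must be odd"
    ph, pw = kh // 2, kw // 2
    h, w, c = image.shape
    # Zero-pad height and width (not channels) so output size == input size
    padded = np.pad(image, ((ph, ph), (pw, pw), (0, 0)), mode="constant")
    out = np.zeros((h, w, c), dtype=np.float64)
    for i in range(h):
        for j in range(w):
            window = padded[i:i + kh, j:j + kw, :]  # (kh, kw, C) neighborhood
            # Weighted sum over the neighborhood, separately for each channel
            out[i, j, :] = np.sum(window * kernel[:, :, None], axis=(0, 1))
    return out

# Usage: a 3x3 box blur (plain average) on a tiny random RGB "image"
rng = np.random.default_rng(0)
img = rng.random((5, 5, 3))
box = np.ones((3, 3)) / 9.0
blurred = filter_image(img, box)
print(blurred.shape)  # → (5, 5, 3)
```

Nested Python loops like these are one reason filtering a full-size photo can take a while; vectorized routines compute the same sums much faster.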

Next, we implemented a Sobel filter, which uses the Sobel operator to calculate the gradient of image intensity at each pixel. Because the gradient is largest where intensity changes abruptly, the Sobel filter highlights edges. Here is what happened to our cat picture after applying the Sobel filter:
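To make the Sobel step concrete, here is a small sketch on a grayscale NumPy array. It assumes the standard 3x3 Sobel kernels and replicate (edge) padding, since zero padding would create false edges at the image border; the helper names `correlate3x3` and `sobel_magnitude` are illustrative only. The filter produces a horizontal and a vertical gradient at every pixel, and their combined magnitude is large wherever intensity changes sharply.

```python
import numpy as np

# Standard 3x3 Sobel kernels. SOBEL_X responds to left-to-right intensity
# changes (vertical edges); its transpose responds to top-to-bottom changes.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T

def correlate3x3(gray: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Cross-correlate an (H, W) image with a 3x3 kernel, same-size output."""
    padded = np.pad(gray, 1, mode="edge")  # replicate border pixels
    h, w = gray.shape
    out = np.zeros((h, w))
    for di in range(3):
        for dj in range(3):
            # Add this kernel weight times the image shifted by (di-1, dj-1)
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out

def sobel_magnitude(gray: np.ndarray) -> np.ndarray:
    gx = correlate3x3(gray, SOBEL_X)  # horizontal gradient
    gy = correlate3x3(gray, SOBEL_Y)  # vertical gradient
    return np.hypot(gx, gy)           # gradient magnitude at each pixel

# Usage: a vertical step edge (dark left half, bright right half)
step = np.zeros((5, 6))
step[:, 3:] = 1.0
print(sobel_magnitude(step)[2])  # → [0. 0. 4. 4. 0. 0.]
```

Only the two columns on either side of the step light up, which is why a Sobel-filtered photo looks like an outline drawing of the original.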

The real fun begins…

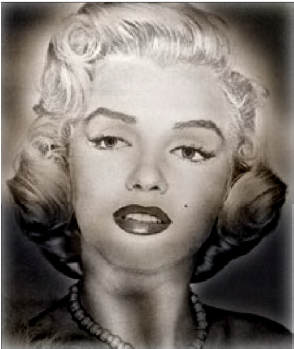

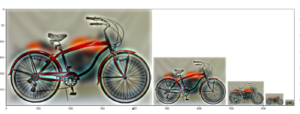

My favorite part of this entire assignment was the hybrid image portion. A hybrid image combines a low-pass filtered version of one image with a high-pass filtered version of another. A low-pass filter keeps the low frequencies in an image and removes the high ones; a high-pass filter does the opposite. You might have heard about frequency in sound, but what is frequency in an image? The frequency of an image is basically the rate at which intensity values change across the picture. In a high-frequency region, such as an edge or fine texture, intensity changes sharply from one pixel to the next. In a low-frequency region, such as a smooth patch of sky, neighboring pixels have similar values and change only gradually. Hybrid images remind me of optical illusions because the eye sees one image when looking at the screen up close, where the fine high-frequency detail dominates, and the other image from far away, where only the low frequencies remain visible. The way that image filtering tricks the eye into seeing two different images in “one” image is one of the things that makes computer vision so cool! Some more hybrid image examples can be seen below:

Hybrid images are created by first calculating the low frequencies of both images being used. Then we can find the high frequencies of the second image by subtracting its low-pass filtered (blurred) version from the original image. This leaves us with the low frequencies of image 1 and the high frequencies of image 2, and summing these two gives us the beautiful hybrid images seen above.
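The recipe above translates into a few lines of NumPy. This is a sketch under my own assumptions rather than the project's required implementation: the names `low_pass` and `hybrid_image`, the kernel size rule, reflect padding, and the final clipping to [0, 1] are all illustrative choices. It also uses the fact that a 2D Gaussian blur is separable, so it can be applied as a 1D Gaussian down the columns and then across the rows.

```python
import numpy as np

def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian; assumed length 4*sigma + 1, forced to be odd."""
    k = int(4 * sigma + 1)
    k += 1 - k % 2  # bump even lengths up by one so the kernel has a center
    x = np.arange(k) - k // 2
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return g / g.sum()

def low_pass(image: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian blur of an (H, W) or (H, W, C) float image, same size out."""
    g = gaussian_kernel_1d(sigma)
    pad = len(g) // 2

    def blur_1d(v: np.ndarray) -> np.ndarray:
        # Reflect-pad, then a 'valid' convolution returns len(v) samples
        return np.convolve(np.pad(v, pad, mode="reflect"), g, mode="valid")

    out = np.apply_along_axis(blur_1d, 0, image)  # blur down the columns
    return np.apply_along_axis(blur_1d, 1, out)   # then across the rows

def hybrid_image(image1: np.ndarray, image2: np.ndarray, sigma: float):
    """Low frequencies of image1 plus high frequencies of image2."""
    low1 = low_pass(image1, sigma)
    high2 = image2 - low_pass(image2, sigma)  # original minus its low frequencies
    hybrid = np.clip(low1 + high2, 0.0, 1.0)  # keep pixels in the [0, 1] range
    return hybrid, low1, high2

# Usage with two random grayscale "images" in [0, 1]
rng = np.random.default_rng(0)
a, b = rng.random((32, 32)), rng.random((32, 32))
hybrid, low1, high2 = hybrid_image(a, b, sigma=2.0)
print(hybrid.shape)  # → (32, 32)
```

A larger `sigma` strips more detail out of the low-pass image and moves more of image 2 into the high-frequency layer, which is why the value usually needs to be tuned by eye for each image pair.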

Conclusion

Although computer science can be daunting with its vast library of tools and functions, by going through the resources bit by bit (no pun intended) and getting help from the class TAs, I was able to complete Project 1! Besides the actual content, I learned from this project that computer vision functions take a long time to run on old computers and that Piazza is one of the best tools ever used at Tech. Overall, I had fun making the hybrid images, though it took me a while to figure out exactly how. The key to computer science is patience, asking questions, and not giving up when errors keep bombarding your terminal.