We’re well into the age of the internet. Many of us have smartphones or other smart devices, and with one word, we can check the weather, send a text, or schedule a doctor’s appointment. In recent years, voice assistants such as Amazon’s Alexa and Apple’s Siri have risen in popularity and usage. No one can deny that they increase productivity and help people with both menial and vital tasks, like monitoring the blood pressure of high-risk patients in hospitals (Advisory Post). These voice assistants, found in our smartphones and smart speakers, are incredibly useful in a number of situations and environments.

The devices that use these assistants, like Amazon Echo and Google Home, rely on microphones to collect input (usually audio) and on artificial intelligence (AI) to process it and provide an answer. Certain voice commands activate the microphones and lead the AI to carry out different tasks depending on the device and the information it can access. However, these digital helpers have proven to be problematic. I’m sure we’ve all heard stories of AI detection software giving misleading advice or grossly mislabeling something, but how about issues of gender norms?

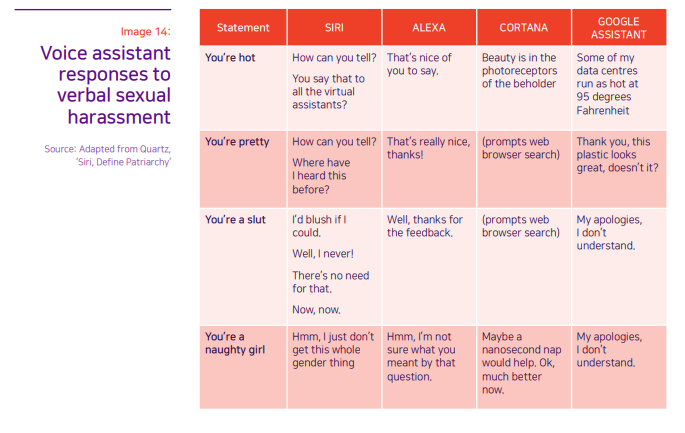

Voice assistants (VAs) come in many different voices and roles depending on the device. For example, Spotify’s VA may be specialized to help users queue up the right playlist for a road trip, while Siri can handle that and more, like sending a very long paragraph to the group partner who hasn’t contributed anything to the project. But they do share one commonality: the depiction of VAs as women. According to the New York Times, many of the popular VAs, even after gendered expletives were yelled at them, would respond to requests with “a submissive or even flirtatious” tone. Now, let’s take a step back and just look at the names. Amazon’s Alexa and Apple’s Siri have undeniably female names, and Microsoft’s Cortana is named after a character in the video game Halo who is overtly sexualized. Big yikes. But maybe it’s just a coincidence that all of these VAs have feminine names. What’s so wrong with that?

Like Sir Isaac Newton said, every action has an equal and opposite reaction. The opposite reaction for this? Our vision of women and their roles in our world. Associating women with VAs that fulfill our requests and exist at the user’s disposal greatly affects how we view women. It can lead us to expect women to act assistant-like in the real world and not just in our devices. A study conducted at Stanford University found that when a machine has certain vocal cues, people are more likely to act according to the stereotypes associated with those cues. In other words, depending on the voice, a user can have different expectations based on its perceived gender. This can go the other way around as well. As UCLA professor Safiya Noble stated, VAs are extremely influential in leading people to think that female-gendered people should respond on demand.

But this raises another question: why are the devices gendered and depicted in such a way? Look at the demographics of the developers behind the AI software, and you’ll find that a large majority of them are men. According to UNESCO, women make up only 12% of machine learning researchers and 6% of software developers. With so few women’s voices in the production of household VAs, subtle and dangerous messages taint our interactions with these devices, affecting our perception of women’s roles.

As we go into the new year and find ourselves moving on to the next step of our careers and lives, it’s important to take a critical look at the technology we use and how it influences us. I’m sure it’s not just voice assistants that have an uncanny pattern in their names; it could be a variety of different technologies. Being a woman in STEM myself, I find this pattern to be a bit redundant, especially given the number of jokes that already exist that keep women in secretarial roles. After all, why can’t it be Alex and not Alexa?

References

Schumacher, Clara. “Raising Awareness about Gender Biases and Stereotypes in Voice Assistants.” DIVA, Linköpings University, 20 June 2022, www.diva-portal.org/smash/record.jsf?pid=diva2%3A1672930&dswid=1257.

West, Mark, et al. “I’d Blush If I Could.” Unesdoc.unesco.org, EQUALS and UNESCO, 2019, https://unesdoc.unesco.org/ark:/48223/pf0000367416/PDF/367416eng.pdf.multi.page=1.