Have you ever seen a humanoid design that looks just a little off? Whether it’s the subtle placement of facial features, an unnatural glint in the eyes, or just a lurking, subconscious feeling that something isn’t right, you’ve likely experienced the sensation known as the uncanny valley.

What is the uncanny valley?

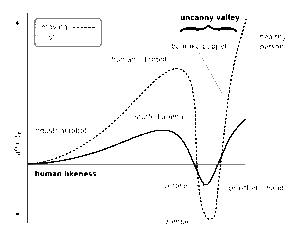

The concept of the uncanny valley was introduced in 1970 by robotics professor Masahiro Mori. Mori describes it as a break in what would otherwise be a steadily rising relationship, stating, “as robots appear more humanlike, our sense of their familiarity increases until we come to a valley” (Mori, 1970). The ‘valley’ he refers to is the point in the relationship between human likeness and familiarity where familiarity plummets before rising again. Mori sketched a graph of this phenomenon, shown in the accompanying diagram. The effect can be amplified further by additional humanlike cues, such as movement or touch (Mori, 1970).

(Original diagram created by Masahiro Mori, who coined the term uncanny valley in 1970.) Source: Wikimedia Commons [https://commons.wikimedia.org/w/index.php?curid=2041097]

What actually causes the uncanny valley is a question many researchers have investigated and theorized about. Several hypotheses have been proposed, two of which, categorization uncertainty and perceptual mismatch, are discussed below. Unfortunately, these hypotheses, and the root of the phenomenon more broadly, remain under-researched, making it difficult to pin down a definitive cause (Kätsyri et al., 2015).

Categorical perception refers to the tendency to perceive things in terms of the mental categories they belong to. Differences between items that fall into separate categories are accentuated, while differences between items within the same category are de-emphasized (Goldstone & Hendrickson, 2009). The categorization uncertainty hypothesis builds on this idea, proposing that a kind of cognitive dissonance arises when a humanoid design reaches the uncanny valley. At this stage, the design is ambiguous enough that it is hard to decide whether the figure belongs in the ‘human’ category or the ‘robot/inhuman’ category, and this uncertainty is thought to produce the discomfort associated with the effect (Kätsyri et al., 2015).

Another hypothesis centers on perceptual mismatch. It follows a similar line of reasoning to the categorization uncertainty hypothesis: the uncanny valley effect is thought to arise from discomfort caused by the brain’s difficulty in making sense of what it perceives. In this case, the confusion comes from a figure that is highly humanlike overall but has a feature that looks inhuman or artificial. The mismatch between a predominantly human impression and a feature that contradicts it is thought to create the unsettling feeling of uncanniness (Kätsyri et al., 2015).

Uncanny Valley and AI

Over the past several years, the public has witnessed rapid technological development. As artificial intelligence (AI) programs, generative technologies, and AI-driven interfaces become more widespread, a question arises: how does the uncanny valley intersect with AI? Can the phenomenon extend beyond visual design?

A recent study explores these perceptions in action and how they affect user interaction and emotional responses. Deepali Kishnani, a student at MIT, designed an experiment in which participants held text conversations with one of three partners: a bot engineered to be ‘human-like’, a bot engineered to be ‘uncanny-like’, or a human as a control. After the conversation, each participant rated their partner on anthropomorphism, animacy, likeability, perceived intelligence, and safety (Kishnani, 2025).

Based on the ratings, the study concluded that there is evidence of an uncanny valley effect in text-based interaction, as the ‘uncanny-like’ bot was consistently rated lowest on all dimensions. The findings also suggest that people struggle to distinguish human interactions from AI interactions: 60% of participants in the human control group incorrectly identified their human chat partner as AI (Kishnani, 2025).

Given this evidence that the uncanny valley may extend to chatbot interactions, a further question arises: how does it affect user trust? Another study examines how anthropomorphizing AI systems can raise concern among users by leading them to attribute humanlike negative intentions to a nonhuman bot (Kim et al., 2024). This study draws on a concept known as the ‘uncanny valley of the mind,’ which focuses on humanlike intentions rather than humanlike appearance. The authors suggest that the uncanny valley of the mind arises when users who perceive a chatbot as having a humanlike mentality (also known as a ‘digital mind’) assume it also has humanlike intentions, such as the capacity to misuse their information (Kim et al., 2024).

To test this, the researchers created two chatbots accessible through Facebook Messenger, one ‘anthropomorphized’ and one ‘non-anthropomorphized.’ Over a series of interactions, each bot asked for personal information and later presented either an ad containing that information or a generic ad without it. When the anthropomorphized bot presented a personalized ad, participants reported increased privacy concerns. This effect did not appear with the non-anthropomorphized bot, whether its ad was personalized or not. The researchers concluded that greater anthropomorphism in chatbots reduces consumer trust and willingness to purchase when personal information is included in the pitch (Kim et al., 2024).

What Does This Mean for the Future of AI?

AI is being applied to a growing range of functions. As it spreads into writing tools, customer service, and ad personalization, the opportunities for users to distrust these systems grow as well. The research above suggests that the uncanny valley can seep into perceptions of AI-powered systems, so how will producers respond?

In my opinion, there are two ways this issue could play out from a psychological standpoint. Either producers will work to develop AI systems that feel less uncanny and more convincingly human, or consumers will begin to withdraw their trust. Historically, both have occurred in product development, and examples involving the uncanny valley can be observed.

A good example of both outcomes is the design of Sonic in the 2020 film ‘Sonic the Hedgehog.’ When the first trailer was released, fans of the franchise were horrified by the uncanny design of their beloved main character. Sonic, a very cartoonish character, had been adapted for his live-action role by combining realistic CGI textures and a host of humanlike features with a decidedly nonhuman creature. The backlash was immediate, with many fans taking to social media to criticize the movie and declare that they would not support it (Alexander, 2020). This is an example of consumers withdrawing their trust. The producers were then pushed to redesign the character to be more cartoonish and appealing, illustrating how producers may be forced to adapt their designs to consumer expectations.

Only time will tell how AI will develop. Until then, be a critical consumer. Do you find yourself experiencing a lurking, unsettling, off feeling when chatting with AI systems?

References:

Alexander, B. (2020, February 17). How “Sonic the Hedgehog” avoided death by Twitter with a dramatic, game-saving redesign. USA Today. https://www.usatoday.com/story/entertainment/movies/2020/02/13/sonic-the-hedgehog-movie-redesign-how-backlash-made-character-better/4730593002/

Goldstone, R. L., & Hendrickson, A. T. (2009, December). Categorical perception. WIREs Cognitive Science. https://wires.onlinelibrary.wiley.com/doi/10.1002/wcs.26

Kätsyri, J., Förger, K., Mäkäräinen, M., & Takala, T. (2015). A review of empirical evidence on different uncanny valley hypotheses: Support for perceptual mismatch as one road to the valley of eeriness. Frontiers in Psychology, 6, 390. https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2015.00390/full

Kim, W., Ryoo, Y., & Choi, Y. K. (2024). That uncanny valley of mind: When anthropomorphic AI agents disrupt personalized advertising. International Journal of Advertising, 1–30. https://doi.org/10.1080/02650487.2024.2411669

Kishnani, D. (2025, March 7). The uncanny valley: An empirical study on human perceptions of AI-generated text and images. DSpace@MIT. https://dspace.mit.edu/handle/1721.1/159096

Mori, M. (1970). The uncanny valley. Energy, 7(4), 33–35. https://www.almendron.com/tribuna/wp-content/uploads/2018/01/morunc.pdf